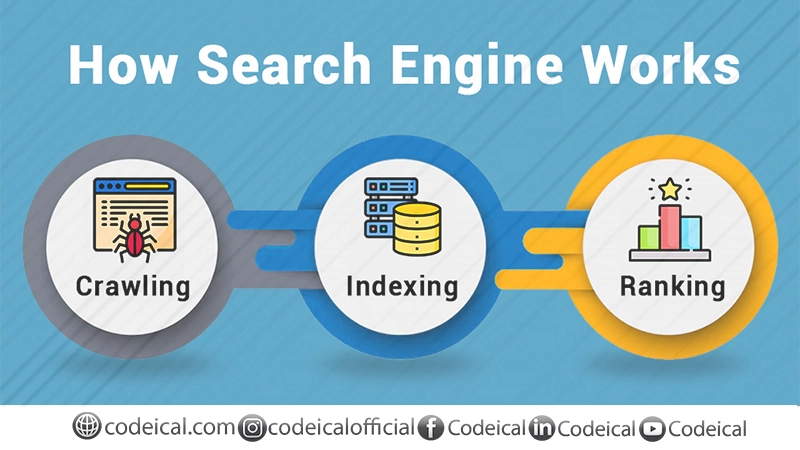

Explained: 4 Fundamental Steps of Technical SEO for Enhancing Your Website’s Performance – Crawling, Rendering, Indexing, and Ranking.

Introduction

In Search Engine Optimization (SEO), understanding the stages search engines go through is essential for earning good rankings. Yet many practitioners confuse or conflate these stages, which leads to misunderstandings and ineffective strategies. In this article, we explore the four fundamental phases of technical SEO: Crawling, Rendering, Indexing, and Ranking. We explain why each one matters and how it affects a website’s performance in search results.

Why Knowing The Difference Matters

Before exploring each step in detail, it is worth emphasizing why the distinctions between them matter. Consider a legal case in which SEO practices are under scrutiny, and the opposing party’s so-called “expert” makes fundamental errors while describing Google’s processes. Mistakes like these could get the expert’s findings ruled inadmissible and change the outcome of the case.

The same applies to everyday SEO work: confusing crawling with indexing, or misunderstanding how ranking works, leads to poor optimization decisions that hurt a site’s performance. Understanding these steps is therefore more than a matter of semantics; it is a critical foundation for any successful SEO strategy.

Whether in a courtroom or in day-to-day optimization, knowing where one step ends and the next begins is what makes an SEO approach effective and leads to better results.

The 4 Essential Steps Of Technical SEO

• Crawling

Crawling is the first stage of a search engine’s workflow. Much as you navigate the web by clicking links, search engines use automated bots, often called “spiders” or crawlers, to traverse web pages. These bots download copies of the pages they visit and follow the links on those pages to discover new ones, gradually building a vast map of interconnected content. Although the concept is simple, crawling in practice means coping with a huge variety of web servers, content management systems, and site customizations.
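To make the crawl loop concrete, here is a minimal sketch of a breadth-first crawler in Python. It is a toy, not how any search engine is actually built: the names `crawl` and `LinkExtractor` and the in-memory `site` dictionary (which stands in for real HTTP requests) are illustrative assumptions, and real crawlers add politeness delays, robots.txt checks, retries, and deduplication at enormous scale.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl: keep a copy of each fetched page, then follow its links.

    `fetch` is any callable returning a page's HTML, or None if it can't be fetched.
    """
    seen = {start_url}
    queue = deque([start_url])
    store = {}  # url -> copy of the HTML the bot downloaded
    while queue and len(store) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        store[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return store


# Hypothetical in-memory "website" standing in for real HTTP requests.
site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">First post</a>',
    "https://example.com/blog/post-1": "<p>Hello, crawler!</p>",
}

pages = crawl("https://example.com/", site.get)
print(sorted(pages))
```

Starting from the homepage, the crawler discovers `/blog/post-1` only by following the link on `/blog`, which is why pages with no internal links pointing to them are hard for bots to find.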

Crawling has its challenges. A misconfigured robots.txt file, for instance, can block bots from pages you want found, leaving that content inaccessible to search engines. Larger websites may also run into limits on their “crawl budget”: the number of URLs a search engine can and wants to crawl on a site within a given period. Because that budget is finite, search engines prioritize some pages over others, favoring those that carry more ranking significance and link weight.
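Python’s standard library includes `urllib.robotparser`, which can show how a robots.txt file gates crawling. The robots.txt rules, user agents, and URLs below are hypothetical examples for `example.com`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

User-agent: Googlebot
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://example.com/private/report"),
    ("Googlebot", "https://example.com/admin/"),
    ("Bingbot", "https://example.com/admin/"),
    ("Bingbot", "https://example.com/blog/post-1"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:<10} {url:<40} {verdict}")
```

Notice that Googlebot may fetch `/admin/`: a crawler that finds a group naming it specifically follows only that group and ignores the `*` rules, so a bot-specific group must repeat any general rules that should still apply. Also keep in mind that robots.txt controls crawling, not indexing.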

Careful crawling is what makes comprehensive indexing, and therefore useful search results, possible. Search engines work through these complexities so that they can index the vast amount of data spread across the World Wide Web and return the most relevant and accurate results to users.

• Rendering

Once a page has been crawled, the search engine moves on to rendering. In this phase, the HTML, JavaScript, and CSS it collected are processed to build a representation of how the page appears to users on desktop or mobile devices. Rendering is essential for understanding how a page’s content is displayed in context, especially on pages that rely heavily on JavaScript or AJAX, where complex scripts can make it harder for search engines to render the page accurately.

Google renders pages with its Web Rendering Service, which runs an evergreen (regularly updated) version of Chromium, while Microsoft Bing uses a rendering engine based on Microsoft Edge. Rendering becomes a problem when crucial content depends on JavaScript: if the search engine cannot process and execute the scripts successfully, that content can be invisible to it, which hurts the page’s ability to rank.

In such cases, several techniques can improve visibility and ranking, including server-side rendering, serving a static HTML version of the page (prerendering), and progressive enhancement. By making sure essential content is accessible without relying solely on JavaScript execution, site owners improve their chances of ranking well and appearing on search engine results pages. Rendering therefore plays a critical role in how discoverable web content is, and optimizing it is an essential part of effective SEO.
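One quick way to spot JavaScript-dependent content is to check whether your critical text is already present in the HTML the server sends, before any script runs. The sketch below assumes you already have that raw HTML as a string; the function `missing_without_js` and the two sample pages are hypothetical, and because the check does not execute JavaScript, it only approximates what a crawler sees before rendering.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text from server-delivered HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_without_js(raw_html, critical_phrases):
    """Return the phrases that do NOT appear in the HTML before any JavaScript runs."""
    extractor = VisibleTextExtractor()
    extractor.feed(raw_html)
    text = " ".join(extractor.chunks).lower()
    return [p for p in critical_phrases if p.lower() not in text]


# Hypothetical pages: one builds its content in the browser, one ships it in the HTML.
csr_page = (
    '<div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Blue Widget. In stock";</script>'
)
ssr_page = '<div id="app"><h1>Blue Widget</h1><p>In stock</p></div><script src="/app.js"></script>'

print(missing_without_js(csr_page, ["Blue Widget", "In stock"]))
print(missing_without_js(ssr_page, ["Blue Widget", "In stock"]))
```

The first page exposes its product text to a search engine only if rendering succeeds; the second delivers the same text in its initial HTML, which is the effect server-side rendering or prerendering aims for.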

• Indexing

After a page has been crawled and rendered, the search engine processes it further to decide whether it should be included in its index. The index works much like the index at the back of a book: it records which keywords and keyword sequences appear on which pages. Not every crawled page makes it in. Common reasons include a “noindex” directive in a robots meta tag or an X-Robots-Tag HTTP header, or the page being judged to be low-quality content.
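The book-index analogy corresponds to what is usually called an inverted index: a map from each term to the pages that contain it. Below is a deliberately simplified sketch; the tokenizer, the function names, and the sample pages are assumptions for illustration, and production indexes also store term positions (so keyword sequences can be matched as phrases) along with many other signals.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of URLs containing it, like the index at the back of a book."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every term in the query (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))  # copy, so the index itself is never modified
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical pages and their text content.
pages = {
    "https://example.com/crawling": "How search engine crawlers follow links",
    "https://example.com/indexing": "How search engines build an index of pages",
    "https://example.com/ranking": "How search engines rank indexed pages",
}
index = build_index(pages)
print(sorted(search(index, "search engines pages")))
```

Answering a query only requires looking up each term and intersecting the resulting sets, which is why a page that never enters the index can never be returned, no matter how relevant it is.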

Finding and fixing indexing issues is a critical part of SEO, because a page that is not indexed cannot appear in search results at all. Proper indexing is therefore vital to a website’s visibility and organic search performance. Sites with little collective PageRank or a limited crawl budget may face additional obstacles to getting their pages indexed.
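A first step in diagnosing why a page is missing from the index is to check it for the two noindex mechanisms described above. This sketch assumes you have already fetched the page’s HTML into a string and its response headers into a dictionary; `blocked_from_index` is a hypothetical helper that treats `none` as including `noindex`, and it deliberately ignores user-agent-specific header values such as `googlebot: noindex`. Crawlers can only see these directives on pages they are allowed to crawl, so a noindex on a URL blocked by robots.txt may go unnoticed.

```python
from html.parser import HTMLParser

NOINDEX_VALUES = {"noindex", "none"}  # "none" means noindex, nofollow


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {k.lower(): (v or "") for k, v in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            for directive in values.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def blocked_from_index(html, headers):
    """Return the reasons (possibly none) why a page asks not to be indexed."""
    reasons = []
    meta = RobotsMetaParser()
    meta.feed(html)
    if meta.directives & NOINDEX_VALUES:
        reasons.append("robots meta tag")
    # Header names are case-insensitive; the value may list several comma-separated directives.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            tokens = {t.strip().lower() for t in value.split(",")}
            if tokens & NOINDEX_VALUES:
                reasons.append("X-Robots-Tag header")
    return reasons


print(blocked_from_index('<head><meta name="robots" content="noindex, follow"></head>', {}))
print(blocked_from_index("<p>Hi</p>", {"X-Robots-Tag": "noindex"}))
print(blocked_from_index("<p>Hi</p>", {"Content-Type": "text/html"}))
```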

In summary, indexing acts as a gate: only pages that pass it are eligible to appear in search results. Directives and low-quality content keep some pages out, and low PageRank or crawl budget limits can make indexing harder, so addressing indexing issues is a prerequisite for ranking.

• Ranking

Ranking is often considered the most critical stage of SEO, and it is certainly the most extensively researched. It is where search engines decide the order in which pages appear in the results for a given query. This ordering is produced by complex algorithms; Google, for example, has long said it takes more than 200 ranking factors into account.

PageRank, one of Google’s earliest ranking algorithms, treated links as votes to estimate a page’s relative importance compared with other pages. The algorithm has evolved considerably since then, assessing links in more sophisticated ways alongside many other ranking signals, so a page’s position now depends on the interplay of many factors.
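The “links as votes” idea can be illustrated with the textbook PageRank formulation, computed by power iteration. This is the simplified model from the original PageRank paper, not Google’s current ranking system; the damping factor of 0.85 is the value commonly cited for that model, and the four-page `web` graph is hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {page: [pages it links to]}.

    Each page splits its score evenly among the pages it links to; pages with
    no outgoing links spread their score evenly over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Score held by dangling pages (no outlinks) is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)  # one "vote" per outgoing link
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page site.
web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "post"],
    "post": ["home"],
}
scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

`home` ends up with the highest score because every other page links to it, while `post`, reachable only through `blog`, scores lowest.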

SEO professionals continually study these algorithms and follow their changes to learn how to optimize content for higher rankings and visibility.

The stakes are high for website owners, businesses, and marketers, since higher rankings bring more organic traffic, better visibility, and an edge over competitors. Ranking is also the most dynamic part of SEO, because search engines update their algorithms frequently to deliver more relevant and accurate results.

Beyond links, search engines weigh many other factors, such as content relevance, keyword usage, website performance, user experience, and mobile-friendliness. Social signals are often mentioned as well, although Google has said they are not a direct ranking factor. Each of these contributes to where a page ultimately ranks.

As competition for online visibility intensifies, understanding the ranking process has become an essential skill for businesses and website owners, and SEO professionals need to adapt their strategies as algorithms change to maintain or improve their positions.

In short, ranking is a complex and constantly evolving process driven by algorithms that weigh numerous factors. As the SEO landscape keeps changing, staying informed and proactive is key to success.

Conclusion

Understanding the four fundamental phases of technical SEO (Crawling, Rendering, Indexing, and Ranking) is essential for anyone involved in website optimization. Mistaking one phase for another, or failing to address problems at any of them, can significantly hurt a site’s performance in search results.

By understanding how search engines work and addressing the challenges specific to each stage, SEO practitioners can build effective strategies to improve a site’s visibility and its rankings on search engine results pages. This knowledge lets website owners make informed decisions and get the most out of their sites.

FAQs

- Why is it important to understand the difference between crawling and indexing? Crawling is the process in which search engine bots visit pages and collect their content, while indexing determines whether those pages are stored in the search engine’s index so they can be retrieved for searches. Knowing the difference helps you identify and fix the right problem when a page is missing from search results.

- How does rendering affect a website’s SEO performance? Rendering is the process of building a page as it would appear to users on different devices. For pages that rely heavily on JavaScript, rendering determines whether search engines can see all of the content; content that fails to render, or renders poorly, can hurt a site’s rankings.

- What are the main challenges of the ranking stage? Ranking relies on complex algorithms that weigh numerous factors (Google cites more than 200) to order search results, so it is essential to prioritize quality content, relevant keywords, and other SEO best practices to rank higher.

- Can websites control which pages search engines index? Yes. Site owners can add a “noindex” directive in a robots meta tag or an X-Robots-Tag HTTP header; Google no longer supports noindex rules in robots.txt files. Canonical tags can also indicate the preferred version of a page for indexing, although search engines treat them as a hint rather than a directive. Managing these signals carefully helps ensure that search engines index only your most relevant and valuable pages.

- How can websites make the most of their crawl budget to ensure comprehensive indexing? For larger websites or those with frequently changing content, crawl budget optimization matters. Fast load times, few server errors, and a focus on high-value pages help search engine bots crawl more pages efficiently, leading to better indexing and SEO performance.
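Search engines do not publish how they schedule crawls, but a toy model helps explain why these site-side fixes matter. In the sketch below, a crawler with a fixed budget picks the URLs with the highest priority. The `UrlInfo` fields, the sample URLs, and the `priority` heuristic (favor well-linked, shallow pages and back off from URLs that recently returned server errors) are hypothetical assumptions, not any search engine’s actual formula.

```python
import heapq
from dataclasses import dataclass


@dataclass
class UrlInfo:
    url: str
    internal_links: int     # internal pages linking here (hypothetical signal)
    click_depth: int        # clicks needed to reach the page from the homepage
    last_status: int = 200  # last HTTP status code the crawler received


def priority(info):
    """Hypothetical heuristic: well-linked, shallow pages first; recent server errors pushed back."""
    score = info.internal_links / (info.click_depth + 1)
    if info.last_status >= 500:
        score /= 10  # back off from URLs that recently failed with a server error
    return score


def plan_crawl(candidates, budget):
    """Return the `budget` URLs with the highest priority (ties broken by URL)."""
    best = heapq.nsmallest(budget, candidates, key=lambda i: (-priority(i), i.url))
    return [i.url for i in best]


candidates = [
    UrlInfo("https://example.com/", internal_links=120, click_depth=0),
    UrlInfo("https://example.com/products", internal_links=80, click_depth=1),
    UrlInfo("https://example.com/pricing", internal_links=20, click_depth=1),
    UrlInfo("https://example.com/api/slow", internal_links=60, click_depth=1, last_status=503),
    UrlInfo("https://example.com/products?sort=price", internal_links=3, click_depth=2),
    UrlInfo("https://example.com/blog/old-post", internal_links=2, click_depth=4),
]
print(plan_crawl(candidates, budget=3))
```

With a budget of three fetches, the low-value sorted listing and the deep blog post are never crawled, and `/api/slow`, which would otherwise make the cut, is pushed out by its recent 503 error.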
